embracing craziness with discipline | my dive into Google Ads

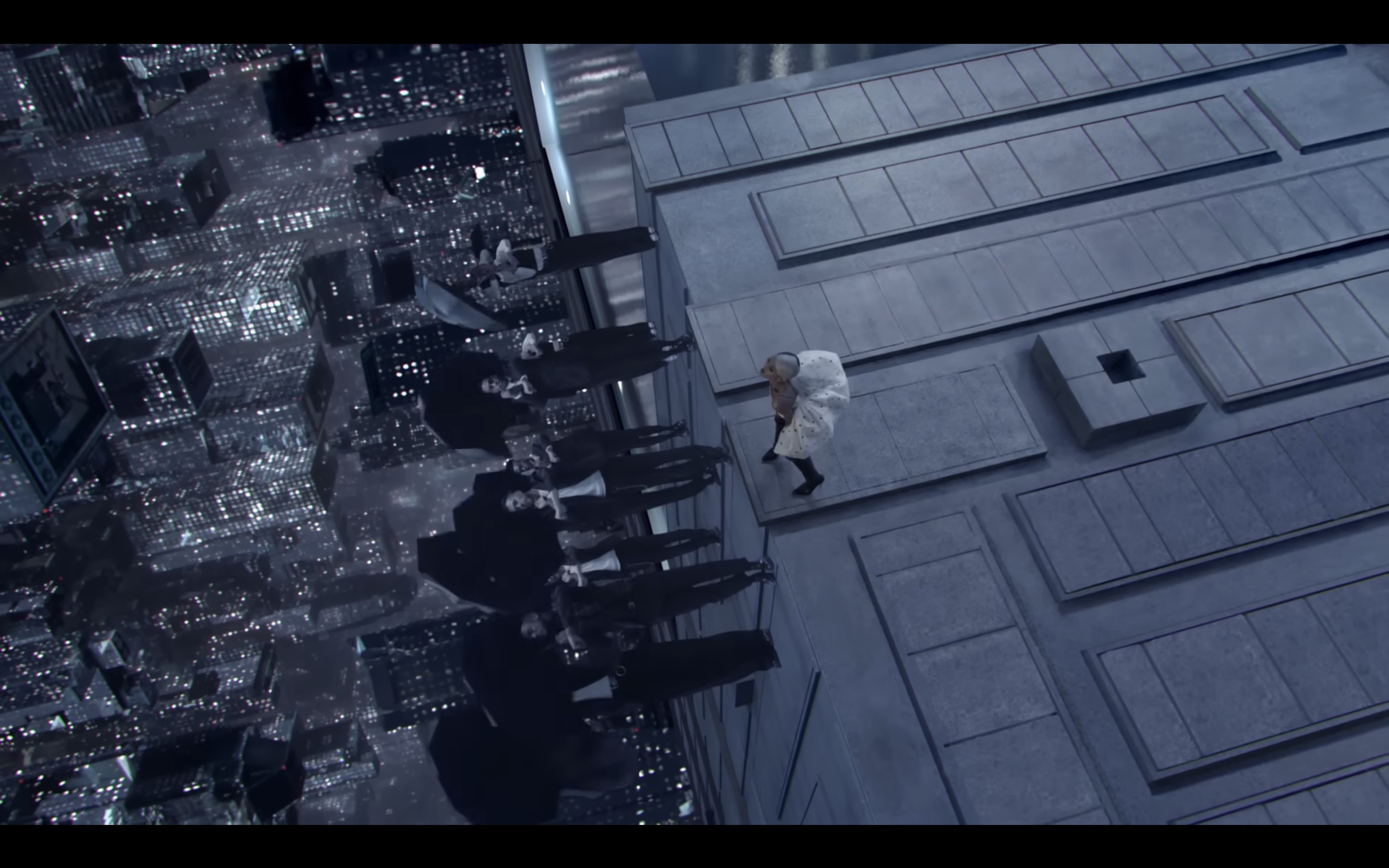

In the spirit of Karl Lagerfeld, who once said, “I like the idea of craziness with discipline,” it all started with an assignment from my professor about Google’s cookie and privacy policies. I was supposed to research how cookies influence ad targeting and how the collected data is processed, stored, and used. But reading about it from every source I could find wasn’t nearly enough to satisfy my appetite, so I turned to something even more exhilarating: ...